Rate limiting: Not as simple as you might think

Firstly, some background info. Every socket.BeginReceive() I call downloads data in 2kB chunks. By receiving 2kB at a time, I can get more accurate download speeds recorded and (hopefully) more accurate rate limiting. Download speeds are averaged over 8 seconds, with data recorded each second. This provides a reasonably stable but accurate download rate. A torrent's download speed is calculated by summing up the download speed of each active client.
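To make the averaging concrete, here's a minimal sketch of an 8-second sliding window with one sample per second. It's written in Python purely for illustration and is not the actual MonoTorrent code; `SpeedMonitor`, `add_bytes`, `tick` and `torrent_speed` are made-up names.

```python
from collections import deque


class SpeedMonitor:
    """Averages bytes/second over the last `window` one-second samples."""

    def __init__(self, window=8):
        # One entry per elapsed second; the oldest sample falls off the end.
        self.samples = deque(maxlen=window)
        self.bytes_this_second = 0

    def add_bytes(self, count):
        # Called for every chunk received (2048 bytes or fewer).
        self.bytes_this_second += count

    def tick(self):
        # Called once per second: store the finished second, start a new one.
        self.samples.append(self.bytes_this_second)
        self.bytes_this_second = 0

    def download_speed(self):
        # Average in bytes/sec over the recorded seconds (0 before any tick).
        if not self.samples:
            return 0.0
        return sum(self.samples) / len(self.samples)


def torrent_speed(peer_monitors):
    # A torrent's speed is the sum of the speeds of its active peers.
    return sum(monitor.download_speed() for monitor in peer_monitors)
```

Note that the reported speed only changes when `tick()` runs, i.e. once a second. That detail matters for everything that follows.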

Method 1:

    while (torrentManager.DownloadSpeed() < this.MaxDownloadSpeed)
        torrentManager.StartAPeerDownloading();

This method seems like it would be a good way to limit download speed, except it fails miserably where there is high bandwidth available, e.g. on a LAN. Because I only update peer download speeds once a second, a LAN client could easily transfer 1-2MB in that second (call it second[0]). If my rate limit was 100kB/sec, the client would then stop downloading chunks from all peers until the 8 second averaging period has passed and it thinks the download speed is 0 again. Then the cycle starts over: the algorithm could request another 2 megabytes in the first second, followed by another 8 seconds of nothing.
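The burst-then-starve cycle is easy to reproduce in a toy simulation. The sketch below is Python, not the real client, and `simulate_method1` is a made-up name. It assumes the link can deliver `link_capacity` bytes in any second where downloading is allowed, and that the measured speed is only refreshed once per second.

```python
from collections import deque


def simulate_method1(limit, link_capacity, seconds, window=8):
    """Returns the number of bytes received in each simulated second."""
    samples = deque(maxlen=window)  # bytes received in each recent second
    per_second = []
    for _ in range(seconds):
        measured = sum(samples) / len(samples) if samples else 0.0
        # The measured speed only changes once a second, so for the whole
        # second the "speed < limit" check either always passes or always fails.
        received = link_capacity if measured < limit else 0
        samples.append(received)
        per_second.append(received)
    return per_second


if __name__ == "__main__":
    # 100kB/sec limit on a link that can deliver 2MB in a second.
    for second, received in enumerate(simulate_method1(100 * 1024, 2 * 1024 * 1024, 18)):
        print(second, received)
```

With these numbers the simulation receives 2MB in second 0, nothing for the next 8 seconds while the burst is still inside the averaging window, then another 2MB in second 9, and so on.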

Method 2:

Keep a count of how many 2kB chunks I transfer per second: chunksCount.

At the end of every second, after I calculate the current download speed, I perform the following calculation:

    downloadSpeed = torrentManager.DownloadSpeed();

    // This is the difference in speed between what I'm currently doing and what I
    // should be doing. A negative number means I'm going too fast. If 20480 is
    // returned, it means I'm 20kB/sec too slow.
    speedDifference = torrentManager.MaxDownloadSpeed - downloadSpeed;

    // The number of additional chunks I need to download in order to reach my rate
    // limiting target is speedDifference / chunkSize, i.e. 20480 / 2048 = 10 chunks
    // extra per second.
    chunksCount += speedDifference / 2048;

This tells me how many chunks I need to request this (and every) second to reach my quota. So I can then run code like:

    while (chunksSent++ < chunksCount)
        torrentManager.StartAPeerDownloading();

However, this runs into the problem that if I overshoot the download speed (due to an inaccurate measured DownloadSpeed, under the same conditions as mentioned in method 1) I end up with a hugely oscillating chunksCount. This algorithm suffers from a similar problem to the one above in high bandwidth situations, but not to the same extent.
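The oscillation shows up even in a very simplified model. The Python sketch below is an illustration only: `simulate_method2` is a made-up name, and it assumes the link always delivers every chunk that is requested and that speed is the 8-second average described earlier.

```python
from collections import deque

CHUNK_SIZE = 2048


def simulate_method2(max_speed, seconds, window=8):
    """Returns how many chunks are requested in each simulated second."""
    samples = deque(maxlen=window)  # bytes received in each recent second
    chunks_count = 0
    requested = []
    for _ in range(seconds):
        # Assumption: every requested chunk arrives within the same second.
        to_request = max(chunks_count, 0)
        requested.append(to_request)
        samples.append(to_request * CHUNK_SIZE)
        # End of second: compare the averaged speed with the limit and
        # adjust the per-second chunk budget by the difference.
        download_speed = sum(samples) / len(samples)
        speed_difference = max_speed - download_speed
        chunks_count += int(speed_difference / CHUNK_SIZE)
    return requested


if __name__ == "__main__":
    print(simulate_method2(100 * 1024, 30))
```

For a 100kB/sec limit the ideal is 50 chunks per second, but because the average lags behind what was actually requested, the budget climbs well past 50 before the correction kicks in and then sinks far below it.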

Method 3:

Similar to above, but with a slight twist.

Assume every chunk I try to download is exactly 2048 bytes (not always true). Therefore the "ideal" number of chunks to download per second is:

    int idealNumberOfChunks = torrentManager.MaxDownloadSpeed / 2048;

Then take a double called "multiplyAmount" and initially set it equal to 1.0.

At the end of every second I do the following:

    // I'm going too fast, so I need to reduce my multiply amount
    if (torrentManager.DownloadSpeed() > this.MaxDownloadSpeed)
        multiplyAmount *= 0.95;
    // I'm going too slow, so I need to increase my multiply amount
    else
        multiplyAmount *= 1.05;

    chunksCount = (int)(idealNumberOfChunks * multiplyAmount);

Basically, at the end of every second I check whether I'm going too slow or too fast. If I'm going too slow, I increase my multiply amount (which retains its value between calls to my method). So if I'm consistently too slow, my multiplyAmount will slowly increase from 1.0 right up to 5.0 (which is a hardcoded max for multiplyAmount).

What this should result in is that chunksCount will initially start off equal to idealNumberOfChunks, but if I'm going too slow it'll request more and more chunks above the ideal amount, and if I'm going too fast it'll start requesting fewer and fewer chunks. Unfortunately this algorithm didn't really work either. It tends to go too fast, then too slowly, then too fast. This is caused by multiplyAmount increasing and decreasing every second. But it is by far the best method so far (and the one I've currently left implemented).
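Put together, the per-second update for method 3 could be sketched like this. It's Python for illustration rather than the real implementation; `update_chunks_count` and its parameter names are made up, and the 5.0 cap is the hardcoded maximum mentioned above.

```python
def update_chunks_count(download_speed, max_speed, multiply_amount,
                        chunk_size=2048, max_multiply=5.0):
    """Runs once per second; returns (chunks_count, new multiply_amount)."""
    ideal_number_of_chunks = max_speed // chunk_size
    if download_speed > max_speed:
        # Going too fast: shrink the multiplier by 5%.
        multiply_amount *= 0.95
    else:
        # Going too slow: grow the multiplier by 5%, up to the hard cap.
        multiply_amount = min(multiply_amount * 1.05, max_multiply)
    return int(ideal_number_of_chunks * multiply_amount), multiply_amount


if __name__ == "__main__":
    multiply_amount = 1.0
    # Pretend the measured speed stays at half the 100kB/sec limit.
    for _ in range(5):
        chunks, multiply_amount = update_chunks_count(
            50 * 1024, 100 * 1024, multiply_amount)
        print(chunks, round(multiply_amount, 4))
```

Since the multiplier moves by 5% every single second and the measured speed is an 8-second average, the adjustment is always reacting to old data, which fits the too fast, too slow, too fast behaviour.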

Rate limiting is definitely not such a simple thing to do. Even keeping track of the DownloadSpeed is tricky, with my code occasionally running riot and calculating 100kB/sec when the actual rate is 50kB/sec.

So if anyone has any ideas on how to implement some accurate rate limiting, that'd be great! Or if my badly explained ideas don't make sense, ask me to explain them a bit better.

Tuesday, October 31, 2006

Monday, October 30, 2006

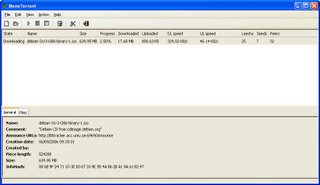

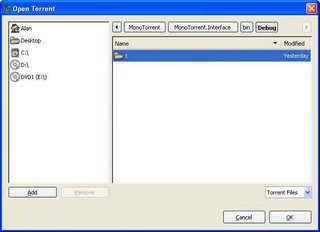

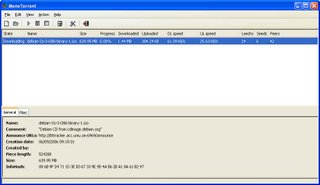

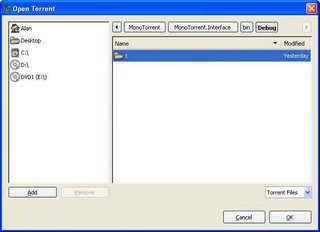

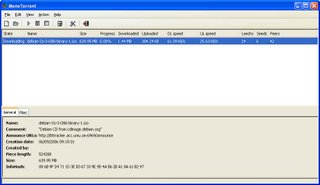

Yet another piece of good news: thanks to the patch by Kevin, a little bit of hacking and some help from the #mono channel, piotrs' GUI is now compiling and starting up.

Ok, so there are a few minor (note: when I say "minor" I mean the whole GUI vanishing without a trace before your eyes ;)) bugs in it which I have yet to work through, but all going well I'll have them ironed out in a few days. But I am extremely tempted to rip apart the existing design (which seems a little overcomplicated) and start afresh using it as a template. It looks nice enough (if basic), but far too much info is hardcoded. For example, there's no way to choose where to save a .torrent to! They'll always go to your $personal$ folder (i.e. My Documents on Windows).

So all going well I'll have a Pre-Beta 1 release of the MonoTorrent client library plus GUI coming out soon. The only major thing left to do is re-implement my rate limiting code. While the previous code worked, it had too much of a dependency on timers, and so created artificial limits on download speed. I have a few ideas, but I'm not quite sure how it'll all pan out.

I'll leave ye with a few screenshots:


Sunday, October 29, 2006

Well well well.

MonoTorrent got a speed boost.

Download speeds have gone from ~30kB/sec to ~4.5MB/sec (and probably faster!). The only bad thing is that I've had to disable my rate limiting code completely until I can come up with a way of writing the code that won't kill download speeds. It's not so simple!

Memory usage is down to single digit figures as well, less than 10 megabytes! So it looks like I've well achieved my aim of creating a cross platform client that uses less than 1/3 of the RAM that Azureus uses. The only thing I need now is a nice GUI for it. I got a patch for the GUI that was created during the summer, and I hacked a little at it myself, so now it compiles. I'll take a more in-depth look at it later on and see if I can nice it up a bit (and reduce its memory usage, if possible).

Just a little eye candy for ye to enjoy: http://picasaweb.google.com/alan.mcgovern/ There's a few images up there where I screenshotted download speed/upload speed and memory usage.

DebianTorrent: This is a real world torrent. So performance in this test is exactly what you'd expect in real life (I'm on a 3meg connection btw).

DownloadingMonoTorrent: I used both Azureus and uTorrent as "seeders" on my local machine to see how fast MonoTorrent would download. Azureus maxes out at ~1.6MB/sec upload, uTorrent maxes out at about 3-3.2MB/sec, so I don't really know the limits of my code ;) My best guesstimate, based on the way I've coded the logic sections, would be a theoretical max of pieceLength*2*(1000/25) kB/sec (with pieceLength in kB), i.e. I can request all the blocks for two pieces every 25 milliseconds. If my maths is right, that's approximately a limit of 20MB/sec per peer. The library should be able to upload as fast as your hard drive can seek ;)

UploadingMonoTorrent: I started MonoTorrent off at 100% and then set Azureus and uTorrent to start at 0% and leech. Bear in mind there would be a little crosstalk between Azureus and uTorrent. They did share some information between themselves.
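The theoretical maximum quoted for DownloadingMonoTorrent can be checked with a couple of lines of Python. The post doesn't say which piece length the 20MB/sec figure uses; 256kB is an assumption here, chosen because it reproduces that number. `theoretical_max_kb_per_sec` is a made-up helper name.

```python
def theoretical_max_kb_per_sec(piece_length_kb, pieces_per_round=2, round_ms=25):
    # All the blocks for `pieces_per_round` pieces can be requested every
    # `round_ms` milliseconds, i.e. 1000 / 25 = 40 rounds per second.
    rounds_per_second = 1000 / round_ms
    return piece_length_kb * pieces_per_round * rounds_per_second


if __name__ == "__main__":
    # Assumed 256kB pieces: 256 * 2 * 40 = 20480 kB/sec, i.e. 20MB/sec.
    print(theoretical_max_kb_per_sec(256) / 1024, "MB/sec")
```

Torrents with larger or smaller pieces would scale this ceiling up or down in proportion.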


Wednesday, October 25, 2006

Since the SoC ended I've been hard at work at both college and life, so coding time has been severely reduced. Nonetheless I have managed a few good things and a few bad things for the library ;)

For the good things: I now have memory usage in the range of 10-13 megabytes with 3 torrents running simultaneously. There are still one or two more optimisations that I have left to try. I'm at the level where calling DateTime.Now is responsible for 30% of my ongoing allocations! Ok, so I am calling it ~400 times a second, but that has nothing to do with it ;) Bug fixes have been flying in and most importantly (imo) I have received my first patches from other people! Yes, other people have sent me patches for MonoTorrent, amazing stuff.

The bad things: I've found some serious bugs in MS.NET which were the root cause behind the mysterious freezing I used to get in the client library. What happens is that sometimes an asynchronous call to socket.BeginReceive would actually be completed synchronously. "But that's good, it saves the computer from starting up another thread" you might think, but you'd be wrong!

My code was built on the assumption that an asynchronous call was asynchronous. So, the problem is this. When dealing with multithreaded code you have to make sure that a different thread doesn't modify/delete data that your main thread is working on. To do this, you must put a "lock" on the item you're working on. Then when Thread2 sees the lock, it won't do anything until Thread1 is finished and releases its lock. Problems arise when Thread1 locks on item A and wants to lock onto B while Thread2 already has a lock on B but wants a lock on A. In this scenario, neither thread can go forward and so your application locks up.

Unfortunately this is exactly what happens when my socket call is completed synchronously. Thank Microsoft for such a nice undocumented feature.
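The A/B scenario above is the classic lock-ordering deadlock. One common remedy (not necessarily what MonoTorrent ended up doing) is to make every thread take the locks in the same global order. The Python sketch below shows that idea only; `worker`, `run` and the choice of ordering by `id` are illustrative assumptions, and it says nothing about the .NET socket behaviour itself.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()
state = {"counter": 0}


def worker(first, second, rounds):
    # Sort the two locks into one global order (here: by id) before taking
    # them, so no thread can end up holding B while waiting for A.
    ordered = sorted((first, second), key=id)
    for _ in range(rounds):
        with ordered[0]:
            with ordered[1]:
                state["counter"] += 1


def run(rounds=1000):
    # Thread1 "wants" A then B, Thread2 "wants" B then A.
    t1 = threading.Thread(target=worker, args=(lock_a, lock_b, rounds))
    t2 = threading.Thread(target=worker, args=(lock_b, lock_a, rounds))
    t1.start()
    t2.start()
    t1.join(timeout=10)
    t2.join(timeout=10)
    # True if both threads finished, i.e. nothing deadlocked.
    return not t1.is_alive() and not t2.is_alive()


if __name__ == "__main__":
    print(run(), state["counter"])
```

If each worker instead took `first` and then `second` exactly as passed in, the two threads could each grab one lock and wait forever on the other, which is the lock-up described above.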

Finally in bad news: download speeds are gone to crap. I'm going to have to remove my rate limiting code and get everything working hunky-dory before I reimplement rate limiting. My whole locking structure needs to be reviewed in light of the above mentioned bug. I've put in a temporary fix, but a side effect of it is to make downloading go pretty damn slowly.

Great fun all round ;)

Alan.

